Since February 2011, I’ve had the opportunity to help a number of companies deal with Panda hits. As part of that work, I’ve assisted several large-scale websites (sites with more than 1 million pages).

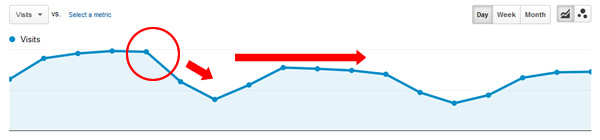

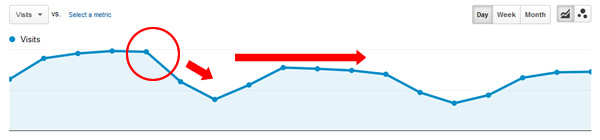

Many of those companies got blindsided by Panda, as they mistakenly saw the misleading surge in traffic as a positive signal SEO-wise. Unfortunately, that sinister surge led to Google seeing a boatload of poor user engagement due to thin content, duplicate content, tech problems causing content issues, etc.

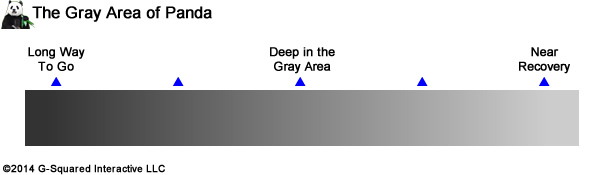

Poor user engagement is Panda’s best friend. Low dwell time in particular can be a killer for sites in the gray area of Panda. That gray can turn black in a hurry.

The Panda Trouble with Large-Scale Websites

As I conduct comprehensive audits through the lens of Panda, companies that have been impacted gain a solid look at the various problems they need to fix. Those problems can often be addressed in the short term, and that can help those companies recover.

But this post is about the long-term danger that large-scale websites face from a Panda standpoint. Sure, the technical and content teams I’m helping are often very smart and can usually implement changes at a fairly rapid pace. But the long-term problem is upstream from the grunt work. You see, the problem is the site itself.

Large-scale websites with more than 1 million pages often have complex site structures, many sections, multiple subdomains, etc. All of this leads to a tough situation when you’re trying to break out of the Panda filter.

For example, problems can easily roll out that impact parts of a site you aren’t necessarily focused on. And if you’re focusing on problems A, B, and C, but new problems like X, Y, and Z arise, then you might miss dangerous Panda-inducing issues.

It isn’t fun when you have serious content problems pop up across a site. That’s especially the case when you find out about those problems after a Panda update rolls out.

Surprise! Your Order for Panda Food Just Arrived

If that happens, the site could easily stay in the gray area of Panda, never recovering from the downturn in traffic (if it’s been hit already). Or, it might only recover to a fraction of where it should recover.

All of this can lead to serious webmaster frustration. Or worse, a site that’s battling Panda might actually see a downturn after previously seeing a recovery or partial recovery. It can easily take the wind out of a marketing team’s sails.

This post will cover an actual example of new and hidden problems causing a second Panda hit. Then I’ll provide some recommendations for making sure this situation doesn’t happen to you.

The problem I’ll cover caused serious problems from a content-quality standpoint and lured Panda back from the Google bamboo forests. It’s a great example that demonstrates the unique challenges of Panda-proofing large-scale websites.

A Quick Snapshot of the Panda Victim

The website in question has battled Panda for about one year. It’s a complex website with several core sections, multiple subdomains, and various teams working on content. It has a long history, which also brings a level of complexity to the situation.

For example, my client barely remembered certain dangerous pieces of the site that were uncovered during audits I conducted. Yes, large-scale websites can contain skeletons content-wise.

The site was chugging along nicely when it first got hammered by Panda. That’s when the company hired me to help rectify the situation.

After several audits were conducted (both comprehensive and laser-focused), a number of changes were recommended. My client was ready to rock and roll and they implemented many changes at a rapid pace.

The site ended up recovering a few months later and actually surged past where it was previously from a Google organic perspective. That’s not often the case, so it was awesome to see.

The Gray Area of Panda

But about six months later, another Panda update rolled out and surprisingly tore a hole in the hull of the website, letting about 30 percent of Google organic traffic pour out.

My client was surprised, deflated, and confused. Why would they get hit again? They worked so hard to recover, surged in traffic, and all was good.

Why would the mighty Panda chew them up like a tasty piece of bamboo? Good question, and it was my job to find answers.

I’ve written several times about the maddening gray area of Panda. It’s a lonely place that confuses webmasters to the nth degree.

Here’s the trouble with the gray area. Most websites have no idea how close they are to getting hit by Panda. And on the flip side, companies negatively impacted by Panda don’t know how far in the gray area they sit (not knowing how close they are to recovery).

It’s one of the reasons I’m extremely aggressive when helping companies with Panda recovery. I’d rather have a client recover and then build upon a clean platform than struggle with Panda, never knowing how close they are to recovery. That’s a maddening and frustrating place to live.

This client was walking a fine line between recovery and impact. And that was due to the complex nature of the site, the long history it has, and the various types of content it provides.

This mixture had taunted the Panda one too many times. The site has now been hit twice in a year (one hit was extremely hard, the other moderate). Regardless, both have impacted traffic and revenue.

Audits Reveal a Battery of New Problems, But One Big One Caught My Eye

So I dug in. I put on my battle gear and jumped into yet another serious Panda audit.

My hope was that I would uncover a smoking gun. Remember, this site is in continual flux, with new content being added on a regular basis (and across several sections). And then there was a long history of content production prior to me helping them. So we were fighting a Panda battle on several fronts.

I wasn’t sure what I would find during my audit, but I was looking for areas of the site that would attract Pandas. For example, low quality content, duplicate content, thin content, affiliate content, scraped content, technical problems causing content-related issues, etc.

My audit yielded a number of problems that needed to be rectified (and fast). The fact that I found a number of new problems underscores the challenges that large-scale websites face.

Remember, this is a site I already helped once with Panda recovery. Knowing that a number of changes were rolled out that could cause Panda problems again was tough to swallow. More about how to avoid this later in the post.

The one issue that stood out among the rest caused a serious problem content-quality wise. And I think that problem heavily contributed to Panda stomping on the site again.

Upon analyzing the site manually, it wasn’t easy to pick up the issue. But when combined with a crawl analysis (crawling a few hundred thousand pages), the problem was readily apparent.

Webmaster-Induced Search Results Pages = Unlimited Thin Pages to Crawl and Index

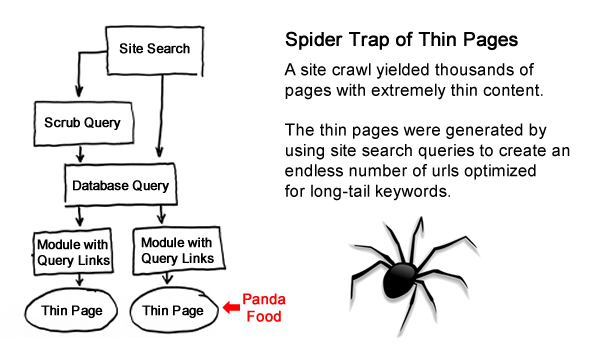

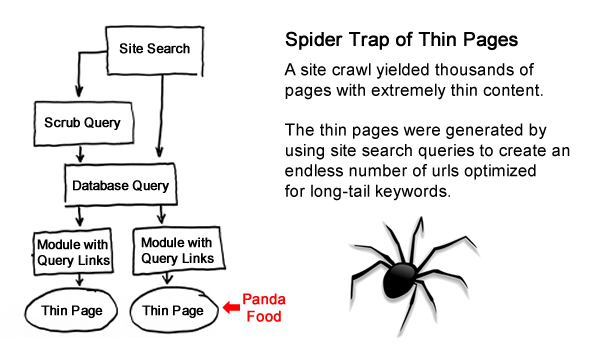

During a crawl analysis, I found thousands of extremely thin pages in one section of the site. The pages contained just a sentence or two, and sometimes less. Having thousands of these pages crawled and indexed was extremely problematic.
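To make the idea of flagging thin pages from crawl data concrete, here is a minimal Python sketch. It is not the tooling used in the audit. The function names, the `{url: html}` input shape, and the 50-word threshold are all assumptions for illustration, and a real audit would isolate the main content area rather than count every word on the page.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text on the page, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def word_count(html):
    """Rough word count of the text in an HTML document."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return len(re.findall(r"\w+", " ".join(parser.chunks)))


def find_thin_pages(pages, min_words=50):
    """Return (url, word_count) pairs below min_words, thinnest first.

    `pages` maps URL -> raw HTML (a hypothetical crawl export).
    The 50-word default is an arbitrary illustrative threshold.
    """
    counts = ((url, word_count(html)) for url, html in pages.items())
    return sorted(((u, n) for u, n in counts if n < min_words),
                  key=lambda pair: pair[1])
```

Run against a crawl of a few hundred thousand pages, a list like this makes a cluster of one-sentence pages in a single section hard to miss.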

Upon checking out the pages, I was scratching my head wondering how they were created in the first place. I initially thought this could be a technical problem, where a glitch ends up creating an endless number of thin pages (which I have seen before). But once I dug into the data, it was clear how the pages were being generated.

It turns out that user-generated searches on the site were being captured and then listed on related pages (as hyperlinks). The links led to new pages that contained a small amount of content about that search (and often linked to even more search-related pages).

We had a perfect storm of endless user queries that could generate pages with very little content. And those pages linked to more search-related pages. So on and so forth. This all led to massive amounts of Panda food on the site.
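The chain described above (search pages linking to more search pages) can be measured from a crawl's link graph. The sketch below is only illustrative. It assumes the crawl is available as a dictionary mapping each URL to the URLs it links to, and that search-generated pages live under a hypothetical `/search/` path. The real site's URL pattern is not given here.

```python
from collections import deque
from urllib.parse import urlparse


def is_search_page(url, prefix="/search/"):
    """True if the URL path starts with the (hypothetical) search prefix."""
    return urlparse(url).path.startswith(prefix)


def reachable_search_pages(links, start, prefix="/search/"):
    """Breadth-first walk from `start`, following only links that
    point at search-generated pages. Returns the set of such pages found.

    `links` maps URL -> list of linked URLs (an assumed crawl export).
    """
    seen = set()
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for target in links.get(url, []):
            if is_search_page(target, prefix) and target not in seen:
                seen.add(target)
                queue.append(target)
    return seen
```

If one ordinary article page leads to a large and growing set of search-generated pages, that is a sign crawlers can keep discovering thin pages indefinitely.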

Needless to say, I presented the findings to my client as quickly as possible. The good news is that they moved fast. The tech team knocked out the work within a week. And as I explained earlier, there were other content-related changes I recommended as well. But most were not as severe as the unlimited number of thin pages I uncovered.

Panda and the Waiting

So now my client must wait as Google recrawls the site, evaluates the overall quality of content, etc.

This highlights another issue with Panda attacks. You could possibly recover quickly (within a few months), or it could take much longer. For example, I had a client that did everything right and waited six months to recover. That’s a long time to wait for a solid website that fully corrected recent content quality problems. Just when they almost gave up, they bounced back (and in a strong way). But they sat in the gray area for a long time.

This is why I tell companies wanting to push the limits of Panda not to. If they get hit, they might not recover so fast (or at all). It’s simply not worth it to test the Panda waters.

Recommendations for Avoiding Unwanted Panda Problems on Large-Scale Websites

I wanted to explain the example above so you would get a good feel for some of the challenges that large websites face. And based on my work helping large and complex websites with Panda, I have provided several recommendations below for avoiding situations like the one I explained above.

Hopefully you can start implementing some of the recommendations quickly (like this week). If you do, you stand a better chance of keeping the Panda at bay. And that’s a good thing considering the impact it could have on your business.

1. Continually Audit the Website

Initial audits can be extremely powerful, but once they are completed, they can’t help you analyze future releases. To combat this situation, I recommend scheduling audits throughout the year. That includes a mixture of crawl audits, manual analysis, and auditing webmaster tools reporting.

Continually auditing the site can help nip problems in the bud versus uncovering them after the damage has been done.
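One way to make recurring crawl audits actionable is to compare each crawl against the previous one and look for sections where the URL count jumps. Here is a minimal sketch, assuming each crawl is simply a list of absolute URLs. The function names and the `min_new` threshold are illustrative, not part of any specific crawler.

```python
from collections import Counter
from urllib.parse import urlparse


def section_of(url):
    """Group a URL by host plus first path segment, e.g. 'example.com/search'."""
    parsed = urlparse(url)
    first = parsed.path.strip("/").split("/")[0]
    return f"{parsed.netloc}/{first}"


def url_growth_by_section(previous_urls, current_urls, min_new=100):
    """Return (section, new_url_count) pairs for sections that gained
    at least `min_new` URLs since the previous crawl, largest first."""
    new_urls = set(current_urls) - set(previous_urls)
    counts = Counter(section_of(u) for u in new_urls)
    return [(section, n) for section, n in counts.most_common() if n >= min_new]
```

A section that suddenly gains thousands of URLs between audits deserves a manual look before the next Panda update, not after it.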

2. Google Alerts for the Technical SEO

From an SEO standpoint, it’s critically important to know the release schedule and understand the changes being rolled out on a website. As the person or agency helping a company SEO-wise, new content or functionality being released to production could either help or hurt your efforts.

With large and complex websites, knowing all the changes being rolled out is no easy feat. I recommend speaking with the tech team about getting notified before each release is pushed to production.

Think of this as Google Alerts for the technical SEO. They just might help you catch problems before they cause too much damage.

3. Gain Access to the Test Environment

My February post explained the power of having access to staging servers. Well, it can help with Panda protection as well.

Understanding the release schedule is one thing, but being able to crawl and test all changes prior to them going live is another. I highly recommend gaining access to your client’s test server in order to check all changes before they are pushed to production.

When I’m given access to test servers, I catch problems often. But the good news is the changes can’t hurt anything SEO-wise while sitting on a test server.

As long as those problems are fixed prior to being pushed to production, then your client will be fine. Work hard to gain access. It’s definitely worth it.

4. Educate Clients

I’ve seen a direct correlation between the SEO health of a website and the amount of SEO education I have provided clients. For example, the more I train tech and content teams SEO-wise, the less chance they have of mistakenly rolling out changes that can negatively impact organic search performance.

Developers are smart, but sometimes too smart. They can get themselves in trouble SEO-wise by coding a site into SEO oblivion. You can avoid this by providing the right training.

5. Create an Open Environment for Tech Questions

Along the same lines, I try to keep an open communication channel with anyone who can impact a website I’m working on.

I want clients to email me with questions about ideas they have, topics they are confused about, content they are thinking about developing, questions about link building, etc.

Similar to the SEO training I mentioned above, quickly answering those questions can go a long way to avoiding a nasty Panda bite (or Penguin if the questions are link-focused).

6. Be an Extension of the Engineering Team

Change is constant with many large-scale websites. This is why it’s extremely important to help clients engineer new sections, functionality, applications, etc.

If you’re involved from the beginning, you can make sure all new projects pass the SEO test. I can’t tell you how many times I have caught issues in the beginning stages of a project that would have caused serious SEO problems down the line.

If you can, I recommend being involved from the conceptual stages of a project and following that project all the way through to production. Guide the project, crawl it, analyze it, and refine it. That’s the only way to ensure it won’t hurt you down the line.

Summary – Avoid Costly Rollouts to Avoid The Mighty Panda

There are many benefits to having large-scale websites, but at the same time, their size and complexity provide unique challenges. There are often many moving parts to keep track of, several core teams working on the site, and multiple sections and subdomains to monitor.

Due to the complex nature of large-scale SEO, it’s easy to roll out changes that can negatively impact a website. That’s why it’s ultra-important to take the necessary steps to protect the site from unwanted problems.

Each month, companies that are Panda-susceptible must face the music. Panda updates once per month, and each update can take 10 days to fully roll out.

If your large and complex website is in the gray area of Panda, then you need to pay particular attention to all changes rolling out on the site. If the scales tip in the wrong direction, then you could be dealing with months of recovery. I recommend following the steps listed earlier to avoid that happening.

Make your site the anti-bamboo. Good luck.