Move Over Google Knowledge Graph, Here Comes Knowledge Vault

Google has been talking about the Knowledge Vault, an upgraded Knowledge Graph that touts "automatic knowledge base construction" and doesn't rely on community-curated databases.

The Knowledge Graph is well known throughout the SEO community as a knowledge base that collects data from a variety of sources across the Web to enhance Google’s search results. But will its technology be “old news” soon? Enter the Knowledge Vault, the brainchild of Google and a sort of “Knowledge Graph on steroids” that could soon be a reality for search.

Per a report in New Scientist, the Knowledge Vault is different from the Knowledge Graph in that it doesn’t rely on “crowdsourcing” for its information. From the report:

This existing base, called Knowledge Graph, relies on crowdsourcing to expand its information. But the firm noticed that growth was stalling; humans could only take it so far. So Google decided it needed to automate the process. It started building the Vault by using an algorithm to automatically pull in information from all over the web, using machine learning to turn the raw data into usable pieces of knowledge.

Google has been alluding to the Knowledge Vault for some time. Take this presentation from Google in 2013. In it, Google points out that the Knowledge Graph is fueled by Freebase.com, a community-curated database that merges many data sources. While “Freebase is large,” the presentation says it’s “still very incomplete,” and “we need automatic knowledge base construction methods.”

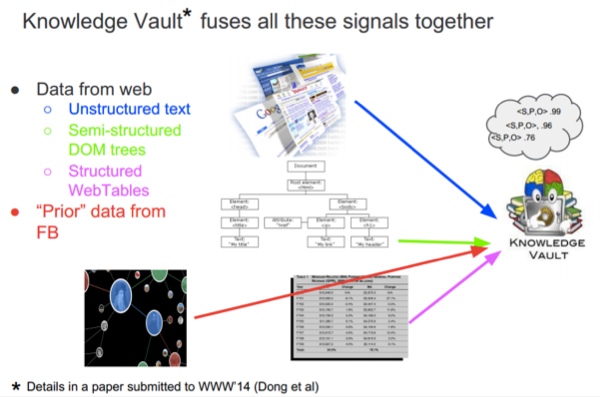

The presentation then goes on to explain how Google is building a more complete knowledge base through the Knowledge Vault, which pulls together a barrage of signals. From the presentation:

There are many academic groups (e.g., CMU, UW, MPI) that have developed methods to extract facts from large text corpora. At Google, we have developed a similar system, except it is 10x bigger. In addition, we use “prior knowledge” to help reduce the error rate.

This paper published by Google goes into more detail about the concepts behind the Knowledge Vault, citing three major components:

- Extractors: These systems extract triples from a huge number of Web sources. Each extractor assigns a confidence score to an extracted triple, representing uncertainty about the identity of the relation and its corresponding arguments.

- Graph-based priors: These systems learn the probability of each possible triple, based on triples scored in an existing KB (knowledge base).

- Knowledge fusion: This system computes the probability of a triple being true, based on agreement between different extractors and priors.
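To make the three components more concrete, here is a minimal Python sketch of how extractor confidences and a prior might be combined into a single probability for one triple. It is an illustration only: the `Triple` class, the `fuse` function, the sample scores, and the choice to simply add log-odds (which assumes the signals are independent) are all assumptions made for this example, not the method described in Google's paper.

```python
from dataclasses import dataclass
from math import exp, log


@dataclass(frozen=True)
class Triple:
    """A (subject, predicate, object) fact. Hypothetical structure."""
    subject: str
    predicate: str
    obj: str


def logit(p: float) -> float:
    """Convert a probability to log-odds, clamped to avoid log(0)."""
    p = min(max(p, 1e-6), 1 - 1e-6)
    return log(p / (1 - p))


def sigmoid(x: float) -> float:
    """Convert log-odds back to a probability."""
    return 1 / (1 + exp(-x))


def fuse(extractor_scores: list[float], prior: float) -> float:
    """Combine extractor confidences with a prior by adding log-odds.

    Assumption: the signals are treated as independent. This is a
    simplification for illustration, not Google's fusion model.
    """
    total = logit(prior) + sum(logit(s) for s in extractor_scores)
    return sigmoid(total)


# Made-up example: two extractors saw the same triple.
triple = Triple("Barack Obama", "place of birth", "Honolulu")
agreeing_prior = fuse([0.8, 0.7], prior=0.6)
doubtful_prior = fuse([0.8, 0.7], prior=0.1)
print(triple, round(agreeing_prior, 3), round(doubtful_prior, 3))
```

In this sketch, the same pair of extractor scores yields a much lower fused probability when the prior considers the triple unlikely, which is the "prior knowledge reduces the error rate" idea in miniature.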

AJ Kohn of SEO and marketing consultancy Blind Five Year Old commented on the details outlined in the Knowledge Vault paper, saying it shows “the various ways in which Google is extracting entities and how they validate them using prior knowledge. The real value of the process outlined is in the combination of different methods of extraction and validation to produce a far more robust and reliable database of entities.”

He adds, “In short, Google’s found a way to build a larger and more reliable Knowledge Graph. Google is harnessing all of the entity applications and essentially triangulating the ones that make sense.”

Marketers will have to wait and see what this means for the future of search optimization. Kohn, who recently wrote about Knowledge Graph optimization, says there are some takeaways in the details of the Knowledge Vault information.

“If you dig into the details, there are some interesting takeaways. First is that the markup that websites are actively using (i.e., schema.org) are a very small part of total extractions and produce far fewer reliable entity facts,” Kohn says. “The vast majority instead come from Google crawling the HTML tree of sites – the unstructured data that they then turn into something sensible.”

Does that mean we should stop using structured data?

Kohn says no: “I believe it’s critical to do so if you want to participate in the future of search. But it may show that the markup isn’t ideal, that sites may implement it in self-serving ways or may not implement it correctly. Or correctly enough for it to be used as an entity fact.”

Another interesting takeaway, Kohn says, is the “people” part of this Knowledge Vault process.

“This is specified in the paper, and lends a bit more fuel to the idea that Google wants to have some sort of author or expertise-based ranking, but wants to do it through entities and not explicit markup.”

Finally, Kohn says, there’s an element of link prediction and “how Google is able to better flesh out entities by understanding what types of facts they should expect to find. For each person they expect to find potential facts such as ‘spouse,’ ‘date of birth,’ ‘place of birth,’ ‘gender,’ ‘parents,’ ‘children,’ etc. Being able to generate these link predictions and seek them out automatically is extremely powerful.”
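The idea of knowing which facts to expect for an entity type can be sketched in a few lines of Python. The example below is hypothetical: the `EXPECTED_PREDICATES` table reuses the person attributes Kohn lists, while the `missing_predicates` function and the sample entity are invented for illustration and say nothing about how Google actually implements link prediction.

```python
# Expected fact types per entity type. The "person" list comes from the
# attributes quoted above; the table itself is a hypothetical structure.
EXPECTED_PREDICATES = {
    "person": [
        "spouse",
        "date of birth",
        "place of birth",
        "gender",
        "parents",
        "children",
    ],
}


def missing_predicates(entity_type: str, known_facts: dict[str, str]) -> list[str]:
    """Return the expected predicates that have no known value yet.

    These gaps are the facts a system could go looking for.
    """
    expected = EXPECTED_PREDICATES.get(entity_type, [])
    return [p for p in expected if p not in known_facts]


# Made-up entity with only two facts filled in.
jane = {"date of birth": "1970-01-01", "gender": "female"}
gaps = missing_predicates("person", jane)
print(gaps)
```

Each entry in `gaps` is a slot the knowledge base expects a person to have but cannot yet fill, so it marks where automated extraction would be most useful.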

What does this mean for the average search marketer? Kohn says that first and foremost, “entities are the way in which Google is going to improve search results for the foreseeable future.”

Secondly, Kohn says, “Much of this information is finding its way into Knowledge Panels. So those sites that can connect their content to the Knowledge Vault may be surfaced in Knowledge Panels in the future.”