Many terms are used within the search industry. These words all have very specific meanings when it comes to search engine optimization (SEO) and how you implement your strategy. However, many of these terms are often used incorrectly.

Sometimes this misuse causes no issues. After all, a rose by any other name and all.

However, there are other times when misunderstanding these terms can lead to mistakes that cost you in the long run, in terms of traffic, position, and conversions.

So let’s look at some of the most commonly misunderstood terms in Google search.

1. Robots.txt

Common Sense Misinterpretation

Most people think the robots.txt file is used to block content from the search engines.

This isn’t how robots.txt works.

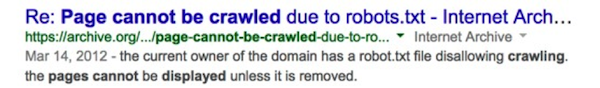

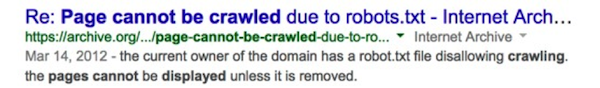

A robots.txt is used to stop a page or part of a site from being crawled and indexed, but not the URL(s) itself. So site owners wind up with this listing in Google’s search results:

What Does Google Say?

Erroneously, webmasters and site owners will add pages and folders to their robots.txt file, thinking this means that page won’t get indexed. Then they see the URL in Google SERPs and wonder how it got there.


Robots.txt won’t prevent the URL from being indexed, only the page content. If Google learns about the page from a link or another source, it can still index the URL, along with the infamous “page cannot be displayed” description.
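To see why, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The robots.txt rules and the example.com URLs are hypothetical. The parser answers exactly one question, whether a crawler may fetch a URL; nothing in robots.txt says “don’t index this URL.”

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks the /private/ folder for every crawler.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

def crawl_allowed(robots_txt, user_agent, url):
    """Return True if robots.txt lets user_agent fetch (crawl) url.

    This is the only decision robots.txt controls. Whether a URL that
    Google already knows about gets indexed is a separate question.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

for url in ("https://example.com/private/report.html",
            "https://example.com/blog/post.html"):
    print(url, "crawl allowed:", crawl_allowed(ROBOTS_TXT, "Googlebot", url))
```

The first URL comes back `False` and the second `True`. A link pointing at the first URL can still get that URL into the index; the crawler simply never reads what is on the page.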

So How Do You Prevent a Page From Being Indexed?

To prevent a page or set of pages from having their URLs indexed, remove those pages from the robots.txt file and add a noindex meta tag to the <head> section of each page you want to block. If a page is still listed in robots.txt, the spider won’t fetch it, which means Google never sees your noindex tag and the URL can still end up indexed.

From Google:

“To entirely prevent a page’s contents from being listed in the Google web index even if other sites link to it, use a noindex meta tag or x-robots-tag. As long as Googlebot fetches the page, it will see the noindex meta tag and prevent that page from showing up in the Web index. The x-robots-tag HTTP header is particularly useful if you wish to limit indexing of non-HTML files like graphics or other kinds of documents.”
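Here is the same point from the crawler’s side, as a minimal Python sketch using only the standard library. `has_noindex` looks for `noindex` in either a robots meta tag or an X-Robots-Tag response header, and `noindex_seen` makes the catch explicit: a page blocked in robots.txt is never fetched, so its directive is never read. The function names and the plain-dict headers are illustrative assumptions, and the checks cover only the plain `noindex` directive.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html, headers):
    """True if the page says noindex in an X-Robots-Tag header or a robots meta tag."""
    # Header names are case-insensitive, so normalize them before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    for directive in lowered.get("x-robots-tag", "").split(","):
        # Also accept the user-agent form, e.g. "googlebot: noindex".
        if directive.split(":")[-1].strip().lower() == "noindex":
            return True
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def noindex_seen(crawl_allowed, html, headers):
    """A page blocked by robots.txt is never fetched, so its noindex is never read."""
    return crawl_allowed and has_noindex(html, headers)


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(noindex_seen(True, page, {}))    # crawlable: the noindex is read
print(noindex_seen(False, page, {}))   # blocked in robots.txt: never read
print(has_noindex("", {"X-Robots-Tag": "noindex"}))  # e.g. a PDF served with the header
```

The header path matters for non-HTML files such as PDFs or images, which have no `<head>` to hold a meta tag.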


2. Google DNS

Common Sense Misinterpretation

Many people mistakenly put their development site up on a server without proper noindex tags or log-in controls and assume that Google can’t find the site unless there is a link to it.

What Does Google Say?

Google runs a public DNS service and is also a domain registrar. This means Google can learn about sites as they come online, whether or not anything links to them. Its DNS service functions as a “recursive name server, providing domain name resolution for any host on the Internet.”

So this one is simple. If you’re starting a new site or building something you don’t want indexed by Google, use log-in controls (to be completely safe) or noindex/nofollow meta tags. Those two, plus the noindex HTTP header (X-Robots-Tag), are the three methods that will keep your URLs out of the index.

Just remember to remove them when the site goes live. You would be surprised how often people forget and later wonder why their site isn’t showing up in search.
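For a development site, the log-in route can be as small as this minimal sketch of Python WSGI middleware (standard library only). It assumes HTTP Basic authentication is acceptable for your staging server; it asks for a username and password and also adds an `X-Robots-Tag: noindex, nofollow` header as a second layer. The `protect_staging` name, the `live` switch, and the credentials are hypothetical, and in practice this usually lives in your web server or hosting configuration instead.

```python
import base64
import hmac

def protect_staging(app, username, password, live=False):
    """Wrap a WSGI app so a non-live site needs a login and always says noindex."""
    expected = "Basic " + base64.b64encode(f"{username}:{password}".encode()).decode()
    noindex = ("X-Robots-Tag", "noindex, nofollow")

    def wrapped(environ, start_response):
        if live:
            # Site has launched: pass requests through untouched.
            return app(environ, start_response)
        supplied = environ.get("HTTP_AUTHORIZATION", "")
        # compare_digest avoids leaking how much of the credential matched.
        if not hmac.compare_digest(supplied.encode("latin-1", "replace"), expected.encode()):
            start_response("401 Unauthorized", [
                ("WWW-Authenticate", 'Basic realm="staging"'),
                ("Content-Type", "text/plain"),
                noindex,
            ])
            return [b"Login required"]

        def start_with_noindex(status, headers, exc_info=None):
            # Logged-in responses still carry the noindex header.
            return start_response(status, list(headers) + [noindex], exc_info)

        return app(environ, start_with_noindex)

    return wrapped


def site(environ, start_response):
    """Stand-in for the real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello from staging"]

application = protect_staging(site, "dev", "change-me")
# To try it locally:
# from wsgiref.simple_server import make_server
# make_server("", 8000, application).serve_forever()
```

Passing `live=True` at launch is the “take them off” step; keeping it as a single switch makes it harder to forget.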

3. Penguin, Panda, Penalties

Common Sense Misinterpretation

Many site owners think there is such a thing as a Panda or Penguin penalty. This is understandable, because these updates can cause a site to lose traffic, sometimes a tremendous amount, when applied.

However, the only Google penalties are manual actions. So what are Penguin and Panda?

What Does Google Say?

Google doesn’t use the word “penalty” with these releases and prefers the term algorithm update. In fact, if you’re talking to a Google engineer, they will correct you by telling you the only penalties are in your Manual Action Viewer.

Though they feel like penalties and are often referred to as such, Penguin and Panda are algorithm changes and the damage done to a site by these updates is sometimes referred to as an “algorithm shift,” not a penalty.

In layman’s terms, however, if it walks like a duck and you lose 50 percent of your business overnight, go ahead and use the word penalty. Just make sure there isn’t a Google engineer nearby to correct you.

4. Duplicate Content Filter

Common Sense Misinterpretation

Many people take duplicate content to mean copying the exact words and paragraphs from one page to a new page. They also think it’s a penalty. It is neither.

Google has a much more sophisticated way to determine whether content is “duplicate,” and when it finds two copies of the same thing, it filters one out. Again, if you lose 50 percent of your content overnight, feel free to call it a penalty; just know that officially it is called a filter, and it is meant only to keep users from receiving poor search results.

What Does Google Say?

(Disclaimer: This information is from a Google patent and may differ in some of its real world application.)

So duplicate content is just taking the words from one page to another, and then Google sees this and filters out one of the results, right? Well, not exactly. Google calls this the “naïve method” for comparing documents.

So what does Google do? According to its original patent (US 8015162 B2), duplicate content detection started with two algorithms (Broder and Charikar) that worked by generating document fingerprints, using a fingerprinting scheme developed by M. Rabin.

From Google:

“In both the Broder and Charikar algorithms, each HTML page is converted into a token sequence. The two algorithms differ only in how they convert the token sequence into a bit string representing the page.

To convert an HTML page into a token sequence, all HTML markup in the page is replaced by white space or, in case of formatting instructions, ignored. Then every maximal alphanumeric sequence is considered a term and is hashed using Rabin’s fingerprinting scheme (M. Rabin, “Fingerprinting by random polynomials,” Report TR-15-81, Center for Research in Computing Technology, Harvard University (1981), incorporated herein by reference) to generate tokens, with two exceptions.

Both algorithms generate a bit string from the token sequence of a page and use it to determine the near-duplicates for the page.”
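To make the “bit string” idea concrete, here is a minimal Python sketch in the spirit of Charikar’s approach (often called simhash). It follows the tokenizing step quoted above: markup is replaced by white space, and each maximal alphanumeric run is a term. Several choices are assumptions for illustration only: terms are lowercased, BLAKE2b from `hashlib` stands in for Rabin’s fingerprinting scheme, the patent’s two unspecified exceptions are skipped, and the width is 64 bits. Pages are then compared by how many bits differ (Hamming distance).

```python
import hashlib
import re

BITS = 64  # assumed fingerprint width

def tokens(html):
    """Replace markup with white space, then take every maximal alphanumeric run."""
    text = re.sub(r"<[^>]*>", " ", html)
    return [term.lower() for term in re.findall(r"[A-Za-z0-9]+", text)]

def term_hash(term):
    """Stand-in for Rabin fingerprinting: a BITS-bit hash of one term."""
    digest = hashlib.blake2b(term.encode("utf-8"), digest_size=BITS // 8).digest()
    return int.from_bytes(digest, "big")

def simhash(html):
    """Each term votes +1/-1 on every bit; the sign of each total becomes that bit."""
    votes = [0] * BITS
    for term in tokens(html):
        h = term_hash(term)
        for bit in range(BITS):
            votes[bit] += 1 if (h >> bit) & 1 else -1
    return sum(1 << bit for bit in range(BITS) if votes[bit] > 0)

def hamming(a, b):
    """Number of differing bits; a small distance suggests a near-duplicate."""
    return bin(a ^ b).count("1")

a = simhash("<p>Fresh roasted coffee beans, shipped daily.</p>")
b = simhash("<div><b>Fresh roasted</b> coffee beans, shipped daily!</div>")
print(hamming(a, b))  # → 0 (markup and punctuation differ, terms do not)
```

Because every term nudges every bit, changing a few words tends to flip only a few bits, which is what makes the bit strings useful for spotting near-duplicates rather than only exact copies.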

The act of generating fingerprints for each document may be effected by (i) extracting parts (e.g., words) from the documents, (ii) hashing each of the extracted parts to determine which of a predetermined number of lists is to be populated with a given part, and (iii) for each of the lists, generating a fingerprint.
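Those three steps fit in a few lines of Python. In this minimal sketch the “parts” are lowercase words, there are four lists, and SHA-1 is the hash; all three are assumptions for illustration, since the patent language quoted here does not fix them. The comparison applies the rule Google states for its improved method: two documents may be considered near-duplicates if any one of their fingerprints match.

```python
import hashlib
import re

NUM_LISTS = 4  # hypothetical "predetermined number of lists"

def h64(text):
    """64-bit hash taken from SHA-1 (an assumed choice of hash)."""
    return int.from_bytes(hashlib.sha1(text.encode("utf-8")).digest()[:8], "big")

def fingerprints(text, num_lists=NUM_LISTS):
    lists = [[] for _ in range(num_lists)]
    # (i) extract parts: here, lowercase words
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # (ii) the hash of each part decides which list it populates
        lists[h64(word) % num_lists].append(word)
    # (iii) one fingerprint per populated list, keyed by list number
    return {i: h64(" ".join(parts)) for i, parts in enumerate(lists) if parts}

def near_duplicates(doc_a, doc_b):
    """Near-duplicates if any same-numbered list yields the same fingerprint."""
    fa, fb = fingerprints(doc_a), fingerprints(doc_b)
    return any(fa[i] == fb[i] for i in fa.keys() & fb.keys())
```

Changing one word alters only the list that word hashes into (and the list its replacement lands in), so the remaining fingerprints still match. That is what lets this kind of scheme tolerate small edits.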

This system eventually was determined to be too intensive in terms of both resources and money, so a new method was developed. Now, from the most recent patent:

*Note: the term “keyword shingles” comes from the Rabin fingerprinting scheme: “Rabin fingerprints, which results in a sequence of (n−k+1) fingerprints, called ‘shingles’.” (Part of the algorithm.)
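Here is what that sequence of (n−k+1) shingles looks like, as a minimal Python sketch: a page of n words yields n−k+1 overlapping runs of k words. For readability it keeps each shingle as a tuple of words rather than a Rabin fingerprint. It also measures resemblance as the exact share of shingles two pages have in common (Jaccard similarity) rather than Broder’s sampled sketch. The value k=4 is an arbitrary choice.

```python
import re

def shingles(text, k=4):
    """All overlapping k-word runs: n words give max(n - k + 1, 0) shingles."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return [tuple(words[i:i + k]) for i in range(len(words) - k + 1)]

def resemblance(a, b, k=4):
    """Jaccard similarity of the two shingle sets (1.0 means identical sets)."""
    sa, sb = set(shingles(a, k)), set(shingles(b, k))
    if not sa and not sb:
        return 1.0  # convention: two pages too short to shingle count as identical
    return len(sa & sb) / len(sa | sb)

page = "one two three four five six seven eight nine ten"
print(len(shingles(page)))  # → 7
print(shingles(page)[0])    # → ('one', 'two', 'three', 'four')
```

Ten words with k=4 give 10 − 4 + 1 = 7 shingles.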

Improved Method

Detecting duplicate and near-duplicate files

US 7366718 B1

This method takes the concept of fingerprinting a step further and adds clustering, plus other signals such as PageRank and freshness.

From Google:

“Improved duplicate and near-duplicate detection techniques may assign a number of fingerprints to a given document by (i) extracting parts from the document, (ii) assigning the extracted parts to one or more of a predetermined number of lists, and (iii) generating a fingerprint from each of the populated lists. Two documents may be considered to be near-duplicates if any one of their fingerprints match.”

Documents whose fingerprints match can then be grouped into clusters of nearly identical pages. As the patent explains further:

The present invention may function to generate clusters of near-duplicate documents, in which a transitive property is assumed. Each document may have an identifier for identifying a cluster with which it is associated. In this alternative, in response to a search query, if two candidate result documents belong to the same cluster and if the two candidate result documents match the query equally well, only the one deemed more likely to be relevant (e.g., by virtue of a high PageRank, being more recent, etc.) is returned.
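The cluster-then-pick step can be sketched in Python as follows. The sketch assumes near-duplicate pairs have already been detected. It uses a union-find structure so the transitive property holds: if A matches B and B matches C, all three share a cluster. As the patent describes, only documents that match the query equally well compete with each other. The `Doc` fields, including the PageRank and timestamp values used to pick a winner, are hypothetical stand-ins.

```python
from dataclasses import dataclass

@dataclass
class Doc:
    url: str
    query_score: float  # how well the document matches the query (hypothetical)
    pagerank: float     # stand-in for "a high PageRank"
    timestamp: int      # stand-in for "being more recent"

def cluster_ids(urls, near_duplicate_pairs):
    """Union-find: near-duplicate links are transitive, so chains join one cluster."""
    parent = {u: u for u in urls}

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving keeps lookups short
            u = parent[u]
        return u

    for a, b in near_duplicate_pairs:
        parent[find(a)] = find(b)
    return {u: find(u) for u in urls}

def filter_results(candidates, cluster_of):
    """Keep one document per (cluster, equal query score), preferring PageRank, then recency."""
    best = {}
    for doc in candidates:
        key = (cluster_of[doc.url], doc.query_score)
        current = best.get(key)
        if current is None or (doc.pagerank, doc.timestamp) > (current.pagerank, current.timestamp):
            best[key] = doc
    return list(best.values())

docs = [Doc("a", 1.0, 0.5, 2015), Doc("b", 1.0, 0.9, 2012),
        Doc("c", 1.0, 0.1, 2016), Doc("d", 1.0, 0.4, 2014)]
clusters = cluster_ids([d.url for d in docs], [("a", "b"), ("b", "c")])
print([d.url for d in filter_results(docs, clusters)])  # → ['b', 'd']
```

Pages “a” and “c” were never compared directly, yet they end up in the same cluster through “b,” and only the highest-PageRank member of that cluster survives.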

The filter can go one step further: the page may not be indexed at all. This has been seen in the wild, especially with certain types of negative SEO.

The present invention may also be used after the crawl such that if more than one document is near duplicates, and then only one is indexed. The present invention can instead be used later, in response to a query, in which case a user is not annoyed with near-duplicate search results.

Interestingly enough, it may also be used to “fix broken links.”

The present invention may also be used to “fix” broken links. That is, if a document (e.g., a Web page) doesn’t exist (at a particular location or URL) anymore, a link to a near-duplicate page can be provided.

The patent doesn’t cover the following point, but it is worth noting. As some may know, if you move your content to a new site that is very similar (or identical) to another site, and Google determines these are the same site, your old links may be forwarded to your new site. If those links were penalized, the penalty transfers as well.

So basically, the new method clusters pages that are identical or nearly identical. Depending on whether a page is rejected, it is either delisted or never indexed (rejected), or filtered out of the results for a query (not rejected). Either way, you are not there and your site is not getting traffic.

Tip: If you need to duplicate your content across sites, make sure your pages are at least 40 percent different. This isn’t just about wording, but also the placement of words and how the “shingles” are contextually presented.
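One way to see why word placement matters is this rough Python sketch. It measures how different two versions are by the share of 3-word shingles they do not have in common. The metric and k=3 are assumptions, and the 40 percent figure is the rule of thumb above, not a documented Google threshold.

```python
import re

def shingle_set(text, k=3):
    """Set of overlapping k-word runs in the text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def percent_different(original, rewrite, k=3):
    """Share of all shingles (from either version) that the two versions do not share."""
    a, b = shingle_set(original, k), shingle_set(rewrite, k)
    union = a | b
    if not union:
        return 0.0
    return 100.0 * (1 - len(a & b) / len(union))

original = "alpha beta gamma delta"
reordered = "delta gamma beta alpha"  # same words, different placement
print(percent_different(original, original))   # → 0.0
print(percent_different(original, reordered))  # → 100.0
```

The reordered version uses exactly the same words, yet none of its shingles match. Measured by shingles, placement counts as much as vocabulary.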

However, you should do this only when duplicating content is unavoidable. The best advice is simply not to duplicate content across sites. After all, which 40 percent would you change?

5. PageRank

Common Sense Misinterpretation

A number from 0 to 10, assigned by Google, indicating how well your site is doing in terms of the algorithm.

That is not really what PageRank is, and the public number isn’t especially valuable. The number you see is a public-facing value with little real meaning beyond this: 0 is bad, 10 is great, moving down the scale is a negative, and moving up the scale is a positive.

What Does Google Say?

First, the PageRank you see and the PageRank Google uses are two different things. The one you see is called “Toolbar PageRank.” Second, public PageRank is being phased out, so its updates come further and further apart. Google thought people got too “hung up” on the measure, so it is taking it away to refocus site owners on metrics it considers better.

Now What Is It?

It is about the links to your site. PageRank looks only at links and the quality of those links, not at content or other factors. It is an indicator of strength, not an absolute measure.

From Google:

“PR(A) = (1-d) + d (PR(T1)/C(T1) + … + PR(Tn)/C(Tn))

where PR(A) is the PageRank of page A, PR(Ti) is the PageRank of pages Ti which link to page A, C(Ti) is the number of outbound links on page Ti and d is a damping factor which can be set between 0 and 1.

So, first of all, we see that PageRank does not rank web sites as a whole, but is determined for each page individually.”
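The quoted formula can be run directly. This minimal Python sketch iterates it over a tiny, hypothetical three-page link graph until the values settle. The default d=0.85 is the value commonly cited from the original PageRank paper, not something this article specifies. Pages with no outbound links simply pass nothing on, which matches the formula as quoted.

```python
def pagerank(links, d=0.85, iterations=100):
    """Iterate PR(A) = (1 - d) + d * sum(PR(Ti) / C(Ti)) over pages Ti linking to A."""
    links = {page: set(targets) for page, targets in links.items()}
    pages = set(links) | {t for targets in links.values() for t in targets}
    outbound = {page: len(links.get(page, ())) for page in pages}  # C(Ti)
    pr = {page: 1.0 for page in pages}  # starting guess
    for _ in range(iterations):
        # The right-hand side is built from the previous round's values.
        pr = {
            a: (1 - d) + d * sum(pr[t] / outbound[t] for t in pages if a in links.get(t, ()))
            for a in pages
        }
    return pr

# Hypothetical graph: A links to B and C, B links to C, C links to A.
graph = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
for page, score in sorted(pagerank(graph, d=0.5).items()):
    print(page, round(score, 4))
```

With d=0.5 this prints A 1.0769, B 0.7692, and C 1.1538. C scores highest because two pages link to it, and the three values add up to 3, the number of pages. Each score belongs to a single page, not to the site as a whole.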

Learning Google

Learning to speak SEO (and Google) in many ways is like learning a new language. Understanding what the terms accurately mean goes a long way toward making sure you don’t mistakenly apply a strategy to your site in a way you didn’t mean to implement. The definitions can even differ from engine to engine.


There are many other terms to learn beyond these five. These were picked because they are among the most commonly misused and misapplied.

As confusing as it can often be, keeping up with the terminology and how it is being applied today can mean the difference between success and accidental failure. And keeping up means knowing what it means today versus yesterday because as search evolves, so does the terminology.

So when in doubt, look it up. I think there are these things called search engines. Give one a try.
